Recursively Finding and Operating on the Largest n Number of Files of a Particular Type

If you want to reverse-engineer this, you can work from left to right, adding one command at a time to see what each step does to the output.

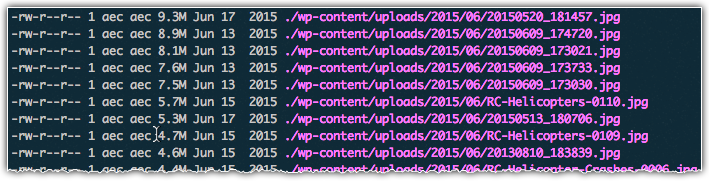

```bash
du -ah . | grep -v "/$" | sort -rh | grep "jpg" | head -25 | cut -f 2 | while read -r line ; do ls -alh "$line"; done
```

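This example just runs ls -alh on each file we found, which is pointless given that’s what we already had before introducing the cut command. It does show the pattern, though: du separates each size from its path with a tab, so cut -f 2 keeps only the path, and the while read loop then runs a command once per file.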
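To make n and the file type easy to change, the one-liner above can be wrapped in a shell function. This is a minimal sketch: the name largest_files and its argument order (directory, n, pattern) are hypothetical, and the stages are the same as in the one-liner, each with a comment on what it contributes.

```bash
#!/usr/bin/env bash
# largest_files is a hypothetical helper name, not a standard command.
# Usage: largest_files [dir] [n] [pattern]
largest_files() {
  local dir=${1:-.}        # where to start the recursive search
  local n=${2:-25}         # how many files to keep
  local pattern=${3:-jpg}  # text the path must contain
  du -ah "$dir" |          # size<TAB>path for every file and directory
    grep -v "/$" |         # drop lines ending in a slash (kept from the one-liner)
    sort -rh |             # biggest first; -h understands K, M and G suffixes
    grep "$pattern" |      # keep only paths containing the pattern
    head -n "$n" |         # keep the n largest
    cut -f 2               # drop the size column, leaving just the path
}
```

For example, largest_files ~/Pictures 10 png would print the ten largest paths under ~/Pictures that contain "png". As in the original one-liner, the pattern can match anywhere in the path, so a directory with "jpg" in its name would match too.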

How about a practical example?

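Here’s how you can shrink the 25 largest jpg files by re-encoding them with mogrify (part of ImageMagick) at a quality of 80. This lowers the JPEG quality rather than changing the image dimensions, and mogrify overwrites each file in place, so keep a backup if you need the originals: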

```bash
du -ah . | grep -v "/$" | sort -rh | grep "jpg" | head -25 | cut -f 2 | while read -r line ; do mogrify -quality 80 "$line"; done
```
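Because mogrify overwrites files in place, it can be worth doing a dry run first. The sketch below (the function name preview_mogrify is hypothetical) runs the same pipeline but only prints each mogrify command instead of executing it.

```bash
#!/usr/bin/env bash
# preview_mogrify is a hypothetical helper name. It runs the same pipeline as
# the mogrify one-liner but prints each command instead of executing it.
preview_mogrify() {
  du -ah . | grep -v "/$" | sort -rh | grep "jpg" | head -25 | cut -f 2 |
    while IFS= read -r line; do
      # IFS= keeps any trailing spaces in the file name intact
      printf 'mogrify -quality 80 "%s"\n' "$line"
    done
}
```

Run preview_mogrify from the directory you want to process, check the list, and then run the real one-liner.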

What if you only want files larger than a specific size? One way to do that is with the find command. For example, to find all jpg files larger than 8M:

```bash
find . -type f -iname "*.jpg" -size +8M -exec du -ah {} \; | sort -rh | etc...
```
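The find command above trails off into "etc...", meaning the remaining stages carry on as before. Here is one way to complete it as a function, assuming you reuse the head and cut stages from the earlier examples; the name largest_over and its arguments are hypothetical. Because -type f skips directories and -iname matches .jpg and .JPG alike, the two grep filters from the du version aren’t needed here.

```bash
#!/usr/bin/env bash
# largest_over is a hypothetical helper name.
# Usage: largest_over SIZE [n] [dir]  (SIZE in find's -size units, e.g. 8M or 500k)
largest_over() {
  local size=$1 n=${2:-25} dir=${3:-.}
  # "{} +" hands many paths to a single du call instead of one du per file.
  find "$dir" -type f -iname "*.jpg" -size "+$size" -exec du -ah {} + |
    sort -rh |       # biggest first
    head -n "$n" |   # keep the n largest
    cut -f 2         # path only
}
```

For example, largest_over 8M 25 prints up to 25 jpg paths larger than 8M, biggest first; pipe that into the same while read loop to run mogrify or any other command on them.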